In addition to the DEA user interface, it is sometimes necessary to access the texts directly. For this purpose, Digilab uses a separate environment that gives direct access to the raw texts. In this environment you can work with R code, download data, save analysis results to your computer, and share them with others. When using and reusing the texts, always observe their license conditions.

Access to files is supported by the R package digar.txts. With a few simple commands, it allows you to:

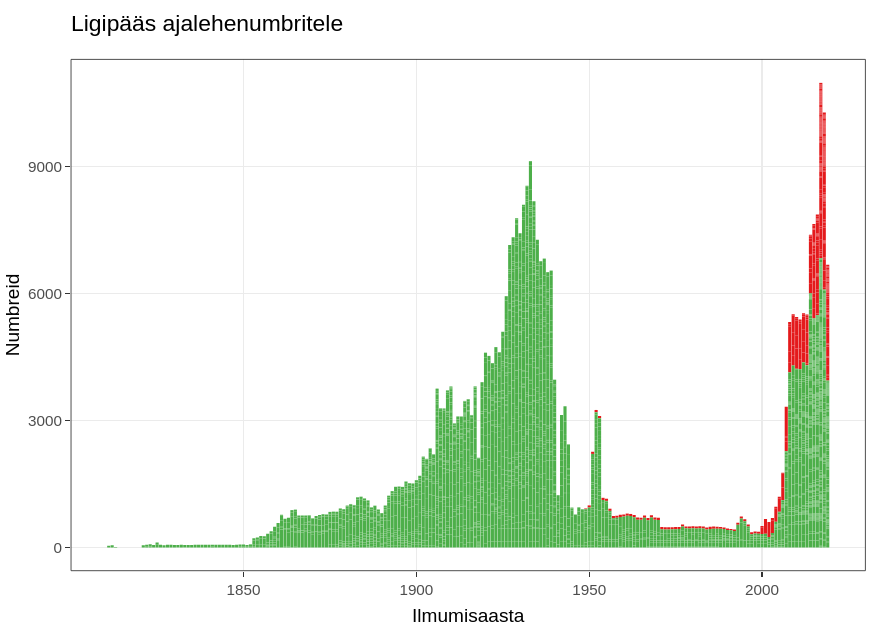

1) Get an overview of the dataset with file associations

2) Create subsets of the dataset

3) Perform text searches

4) Extract immediate context from search results
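To illustrate what extracting the immediate context means, here is a minimal keyword-in-context sketch in base R. It is not the digar.txts implementation. The helper name `kwic_sketch`, the toy texts, and the assumption that the context window is counted in characters are all hypothetical, and `get_concordances()` in the package may behave differently.

```r
# Hypothetical helper: a keyword-in-context sketch, NOT digar.txts::get_concordances().
# Assumption: 'before' and 'after' are measured in characters.
# Returns one row per match, or NULL if nothing matches.
kwic_sketch <- function(texts, pattern, before = 30, after = 30) {
  rows <- list()
  for (i in seq_len(nrow(texts))) {
    txt <- texts$txt[i]
    m <- gregexpr(pattern, txt, perl = TRUE)[[1]]  # start positions of all matches
    if (m[1] == -1) next                            # -1 means no match in this text
    ends <- m + attr(m, "match.length") - 1         # end position of each match
    for (j in seq_along(m)) {
      rows[[length(rows) + 1]] <- data.frame(
        id = texts$id[i],
        left = substr(txt, max(1, m[j] - before), m[j] - 1),  # context before the match
        match = substr(txt, m[j], ends[j]),
        right = substr(txt, ends[j] + 1, ends[j] + after),    # context after the match
        stringsAsFactors = FALSE
      )
    }
  }
  do.call(rbind, rows)
}

# Toy input with the same id/txt layout as the search results read later in this section
texts <- data.frame(
  id = c("a", "b", "c"),
  txt = c("The match with Lurich drew a crowd.", "No mention here.", "lurich and Lurich"),
  stringsAsFactors = FALSE
)
concs <- kwic_sketch(texts, "[Ll]urich", before = 10, after = 10)
```

The character class `[Ll]` matches both capitalisations. This is the same pattern passed to `get_concordances()` in the workflow code.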

You can also save search results as a table and continue working with a smaller subset of data elsewhere.
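A minimal sketch of that round trip, assuming the search results are already in a data frame. The column names, values, and file name are hypothetical. The workflow code uses `readr::write_tsv()` for the same purpose. Base R's `write.table()` is used here only so that the sketch runs without extra packages.

```r
# Hypothetical search results: one row per match
results <- data.frame(
  id = c("a", "b", "c"),
  year = c(1905L, 1921L, 1933L),
  match = c("Lurich", "Lurich", "lurich"),
  stringsAsFactors = FALSE
)

# Save the full table as tab-separated values (hypothetical file name)
out_file <- file.path(tempdir(), "search_results.tsv")
write.table(results, out_file, sep = "\t", row.names = FALSE, quote = FALSE)

# Later, or elsewhere: read the table back and keep only a smaller subset
reloaded <- read.delim(out_file, stringsAsFactors = FALSE)
smaller <- reloaded[reloaded$year < 1930, ]
```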

The commands in the example below let you explore the collection, obtain specific subsets of data, run searches, and extract relevant information for further analysis.

For intermediate processing, you can use various R packages and commands. To process the data in Python instead, first collect the data (for example, by saving it to a file) and then create a new Python notebook.
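As one example of intermediate processing, the sketch below counts word frequencies in a few context snippets using base R only. It then writes the counts to a CSV file that a Python notebook could load, for instance with `pandas.read_csv()`. The snippets and the file name are hypothetical. In practice, the tidytext package loaded in the workflow code provides `unnest_tokens()` for the tokenising step.

```r
# Hypothetical context snippets, e.g. taken from a concordance table
snippets <- c("the wrestler Lurich won", "Lurich won again", "the crowd cheered")

# Lowercase, split on any run of non-letters, and drop empty strings
words <- unlist(strsplit(tolower(snippets), "[^[:alpha:]]+"))
words <- words[words != ""]

# Count word frequencies, most frequent first
counts <- sort(table(words), decreasing = TRUE)
freq <- data.frame(word = names(counts), n = as.integer(counts), stringsAsFactors = FALSE)

# Save for a Python notebook, e.g. pandas.read_csv("word_counts.csv")
write.csv(freq, file.path(tempdir(), "word_counts.csv"), row.names = FALSE)
```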

```r
# Load the required packages from the digar_txt project library
suppressPackageStartupMessages(library(tidyverse, lib.loc = "/gpfs/space/projects/digar_txt/R/4.3/"))
suppressPackageStartupMessages(library(tidytext, lib.loc = "/gpfs/space/projects/digar_txt/R/4.3/"))
suppressPackageStartupMessages(library(digar.txts, lib.loc = "/gpfs/space/projects/digar_txt/R/4.3/"))

# Get an overview of all issues in the collection
all_issues <- get_digar_overview()
```

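```r
# Select newspaper issues with keyid "postimeesew" published after 1880 and before 1940
subset <- all_issues %>%
  filter(DocumentType == "NEWSPAPER") %>%
  filter(year > 1880 & year < 1940) %>%
  filter(keyid == "postimeesew")

# Get the metadata for the selected issues
subset_meta <- get_subset_meta(subset)

# Optionally, write the metadata to a file for easier access when returning to it
# readr::write_tsv(subset_meta, "subset_meta_postimeesew1.tsv")
# subset_meta <- readr::read_tsv("subset_meta_postimeesew1.tsv")

# Search the subset for "lurich" and write the matching texts to lurich1.txt
do_subset_search(searchterm = "lurich", searchfile = "lurich1.txt", subset)

# Read the search results (fread() comes from the data.table package)
texts <- data.table::fread("lurich1.txt", header = FALSE)[, .(id = V1, txt = V2)]

# Extract the immediate context around each match (before = 30, after = 30)
concs <- get_concordances(searchterm = "[Ll]urich", texts = texts, before = 30, after = 30, txt = "txt", id = "id")

# Select newspaper issues with keyid "stockholmstid" published after 1951 and before 2002
subset2 <- all_issues %>%
  filter(DocumentType == "NEWSPAPER") %>%
  filter(year > 1951 & year < 2002) %>%
  filter(keyid == "stockholmstid")
```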

The subset2 selection from stockholmstid has 0 issues with section-level data but 2178 issues with page-level data, so in this case the pages should be used. When combining sources that have page-level and section-level data, you can build custom combinations depending on the question at hand. Note that the page-level data also includes the section-level data when it is available. Using both at the same time can therefore bias the results, because the same text would be counted twice.

```r
# Count the issues in subset2 that have section-level and page-level data
subset2 %>% filter(sections_exist == TRUE) %>% nrow()
subset2 %>% filter(pages_exist == TRUE) %>% nrow()
```
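The advice above can be sketched as a per-issue rule: use section-level data where it exists and fall back to pages otherwise, so that no issue contributes both. The toy table below only mimics the `sections_exist` and `pages_exist` flags used in the check. The `docid` values are hypothetical. The rule itself is one possible custom combination, not a method prescribed by digar.txts.

```r
# Toy stand-in for an overview table; only the two flag columns mirror the real ones
issues <- data.frame(
  docid = c("issue1", "issue2", "issue3", "issue4"),  # hypothetical identifiers
  sections_exist = c(TRUE, FALSE, TRUE, FALSE),
  pages_exist = c(TRUE, TRUE, FALSE, FALSE),
  stringsAsFactors = FALSE
)

# Pick exactly one source per issue: sections if available, else pages, else none (NA)
issues$source <- ifelse(issues$sections_exist, "sections",
                        ifelse(issues$pages_exist, "pages", NA_character_))

# Number of issues that would be read from each source
table(issues$source, useNA = "ifany")
```

Each group can then be processed separately. For example, the page-level group can be processed with `source = "pages"`, as in the next step.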

```r
# subset2 has only page-level data, so use source = "pages"
subset_meta2 <- get_subset_meta(subset2, source = "pages")

do_subset_search(searchterm = "eesti", searchfile = "eesti1.txt", subset2, source = "pages")
```

Convenience suggestion: to make Ctrl+Shift+M insert the pipe operator %>% in JupyterLab, as it does in RStudio, open Settings -> Advanced Settings Editor…, select Keyboard Shortcuts in the list on the left, and add this code to the User Preferences box.

```json
{
  "shortcuts": [
    {
      "command": "notebook:replace-selection",
      "selector": ".jp-Notebook",
      "keys": ["Ctrl Shift M"],
      "args": {"text": "%>% "}
    }
  ]
}
```

National Library of Estonia

Tõnismägi 2, 10122 Tallinn

+372 630 7100

info@rara.ee

rara.ee/en